Text Generation

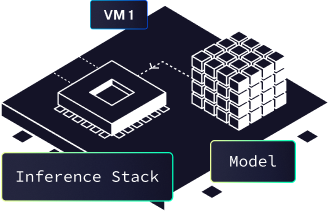

Text generation is supported on the network. As explained in the architecture page, inference takes place in virtual machines.

We provide multiple VMs, each running an inference stack that can change over time. As a result, the API may differ for newer models.

Available models

| Model | Base | API type | Prompt format | Base URL | Completion URL |
|---|---|---|---|---|---|
| NeuralBeagle 7B | Mistral | Llama-like | ChatML | API Url | Completion Url |
| NeuralBeagle 7B | Mistral | OpenAI-compatible | ChatML | API Base Url | Completion Url |
| Mixtral Instruct 8x7B MoE | Mixtral | Llama-like | ChatML or Alpaca Instruct | API Url | Completion Url |
| DeepSeek Coder 6.7B | DeepSeek | Llama-like | Alpaca Instruct | API Url | Completion Url |

API details

Please see the API documentation corresponding to the model of your choice.
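As a rough illustration of what a call to a "Llama-like" endpoint might look like, here is a minimal sketch that builds, but does not send, a completion request. It assumes that "Llama-like" refers to a llama.cpp-server-style endpoint accepting a JSON body with `prompt`, `n_predict`, and `stop` fields. That is an assumption, not something this page confirms, so check the API documentation for your model. The function name `build_completion_request` and the URL are hypothetical placeholders; substitute the Completion URL from the available models table.

```python
import json
import urllib.request

# Placeholder only: replace with the Completion URL listed for your model.
PLACEHOLDER_COMPLETION_URL = "https://example.invalid/completion"


def build_completion_request(completion_url, prompt, max_tokens=128, stop=None):
    """Build (without sending) a POST request for a completion endpoint.

    The field names below follow the llama.cpp server convention, which is
    an assumption about what "Llama-like" means here.
    """
    payload = {
        "prompt": prompt,          # already formatted for the model (e.g. ChatML)
        "n_predict": max_tokens,   # maximum number of tokens to generate
        "stop": stop or [],        # strings that end generation when produced
    }
    return urllib.request.Request(
        completion_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_completion_request(
        PLACEHOLDER_COMPLETION_URL,
        "<|im_start|>user\nHello!<|im_end|>\n<|im_start|>assistant\n",
        max_tokens=64,
        stop=["<|im_end|>"],
    )
    # Inspect the request instead of sending it to the placeholder URL.
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

To actually send the request, pass it to `urllib.request.urlopen` once the placeholder URL is replaced with a real one. The shape of the response depends on the inference stack.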

Prompting formats

Each model has its own prompt format. Providing the format a model expects helps you get better results from it. Please refer to the available models table to find the best format for your model.
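To make the two formats named in the table concrete, here is a minimal sketch of helpers that wrap a message in ChatML or in an Alpaca-style instruct template. The templates follow the commonly used community conventions for these formats. The exact wording a given model was tuned on (in particular the Alpaca preamble) may differ, so treat the strings as assumptions. The helper names `format_chatml` and `format_alpaca` are illustrative and not part of any API described here.

```python
# Commonly used Alpaca preamble; a specific model may expect different wording.
ALPACA_PREAMBLE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def format_chatml(user_message, system_message=None):
    """Wrap a single user turn in ChatML, leaving the assistant turn open."""
    parts = []
    if system_message:
        parts.append(f"<|im_start|>system\n{system_message}<|im_end|>\n")
    parts.append(f"<|im_start|>user\n{user_message}<|im_end|>\n")
    # The model is expected to continue from here and emit <|im_end|> when done.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


def format_alpaca(instruction):
    """Wrap an instruction in an Alpaca-style template, leaving the response open."""
    return (
        f"{ALPACA_PREAMBLE}\n\n"
        f"### Instruction:\n{instruction}\n\n"
        "### Response:\n"
    )


if __name__ == "__main__":
    print(format_chatml("Summarize this page.", system_message="You are a helpful assistant."))
    print(format_alpaca("Write a function that reverses a string."))
```

With ChatML, passing `<|im_end|>` as a stop string is a common way to end generation at the close of the assistant turn.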